Zotonic is an open source framework for doing full-stack web development, all the way from frontend to backend. Consisting of a small set of core functionalities, it implements a lightweight but extensible Content Management System on top. Zotonic’s main goal is to make it easy to create well-performing websites “out of the box”, so that a website scales well from the start.

While it shares many features and functionalities with web development frameworks like Django, Drupal, Ruby on Rails and WordPress, its main competitive advantage is the language that Zotonic is powered by: Erlang. This language, originally developed for building phone switches, allows Zotonic to be fault tolerant and have great performance characteristics.

Like the title says, this chapter focusses on the performance of Zotonic. We’ll look at the reasons why Erlang was chosen as the programming platform, then inspect the HTTP request stack, then dive into the caching strategies that Zotonic employs. Finally, we'll describe the optimisations we applied to Zotonic’s submodules and the database.

The first work on Zotonic was started in 2008, and, like many projects, came from “scratching an itch”. Marc Worrell, the main Zotonic architect, had been working for seven years at Mediamatic Lab, in Amsterdam, on a Drupal-like CMS written in PHP/MySQL called Anymeta. Anymeta’s main paradigm was that it implemented a “pragmatic approach to the Semantic Web” by modeling everything in the system as generic “things”. Though successful, its implementations suffered from scalability problems.

After Marc left Mediamatic, he spent a few months designing a proper, Anymeta-like CMS from scratch. The main design goals for Zotonic were that it had to be easy to use for frontend developers; it had to support easy development of real-time web interfaces, simultaneously allowing long-lived connections and many short requests; and it had to have well-defined performance characteristics. More importantly, it had to solve the most common problems that limited performance in earlier Web development approaches; for example, it had to withstand the "Slashdot Effect" (a sudden rush of visitors).

A classic PHP setup runs as a module inside a container web server like Apache. On each request, Apache decides how to handle the request. When it’s a PHP request, it spins up mod_php5, and then the PHP interpreter starts interpreting the script. This comes with startup latency: typically, such a spin-up already takes 5 ms, and then the PHP code still needs to run. This problem can partially be mitigated by using PHP accelerators which precompile the PHP script, bypassing the interpreter. The PHP startup overhead can also be mitigated by using a process manager like PHP-FPM.

Nevertheless, systems like that still suffer from a shared-nothing architecture. When a script needs a database connection, it needs to create one itself. The same goes for any other I/O resource that could otherwise be shared between requests. Various modules feature persistent connections to overcome this, but there is no general solution to this problem in PHP.

Handling long-lived client connections is also hard because such connections need a separate web server thread or process for every request. In the case of Apache and PHP-FPM, this does not scale with many concurrent long-lived connections.

Modern web frameworks typically deal with three classes of HTTP request. First, there are dynamically generated pages: dynamically served, usually generated by a template processor. Second, there is static content: small and large files which do not change (e.g., JavaScript, CSS, and media assets). Third, there are long-lived connections: WebSockets and long-polling requests for adding interactivity and two-way communication to pages.

Before creating Zotonic, we were looking for a software framework and programming language that would allow us to meet our design goals (high performance, developer friendliness) and sidestep the bottlenecks associated with traditional web server systems. Ideally the software would meet the following requirements.

To our knowledge, Erlang was the only language that met these requirements “out of the box”. The Erlang VM, combined with its Open Telecom Platform (OTP), provided the system that gave and continues to give us all the necessary features.

Erlang is a (mostly) functional programming language and runtime system. Erlang/OTP applications were originally developed for telephone switches, and are known for their fault-tolerance and their concurrent nature. Erlang employs an actor-based concurrency model: each actor is a lightweight “process” (green thread) and the only way to share state between processes is to pass messages. The Open Telecom Platform is the set of standard Erlang libraries which enable fault tolerance and process supervision, amongst others.

Fault tolerance is at the core of its programming paradigm: let it crash is the main philosophy of the system. As processes don’t share any state (to share state, they must send messages to each other), their state is isolated from other processes. As such, a single crashing process will never take down the system. When a process crashes, its supervisor process can decide to restart it.

Let it crash also allows you to program for the happy case. Using pattern matching and function guards to assure a sane state means less error handling code is needed, which usually results in clean, concise, and readable code.

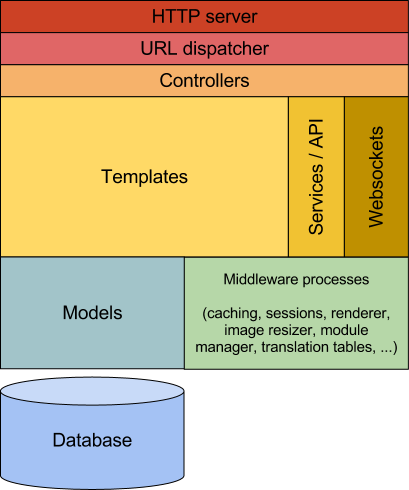

Before we discuss Zotonic’s performance optimizations, let’s have a look at its architecture. Figure 9.1 describes Zotonic's most important components.

Figure 9.1 - The architecture of Zotonic

The diagram shows the layers of Zotonic that an HTTP request goes through. For discussing performance issues we’ll need to know what these layers are, and how they affect performance.

First, Zotonic comes with a built-in web server, Mochiweb (another Erlang project). It does not require an external web server. This keeps the deployment dependencies to a minimum.1

Like many web frameworks, Zotonic uses a URL routing system to match requests to controllers. Controllers handle each request in a RESTful way, thanks to the Webmachine library.

Controllers are "dumb" on purpose, without much application-specific logic. Zotonic provides a number of standard controllers which, for the development of basic web applications, are often good enough. For instance, there is a controller_template, whose sole purpose is to reply to HTTP GET requests by rendering a given template.

The template language is an Erlang implementation of the well-known Django Template Language, called ErlyDTL. The general principle in Zotonic is that the templates drive the data requests. The templates decide which data they need, and retrieve it from the models.

Models expose functions to retrieve data from various data sources, like a database. Models expose an API to the templates, dictating how they can be used. The models are also responsible for caching their results in memory; they decide when and what is cached and for how long. When templates need data, they call a model as if it were a globally available variable.

A model is an Erlang wrapper module which is responsible for certain data. It contains the necessary functions to retrieve and store data in the way that the application needs. For instance, the central model of Zotonic is called m.rsc, which provides access to the generic resource (“page”) data model. Since resources use the database, m_rsc.erl uses a database connection to retrieve its data and pass it through to the template, caching it whenever it can.

This “templates drive the data” approach is different from other web frameworks like Rails and Django, which usually follow a more classical MVC approach where a controller assigns data to a template. Zotonic follows a less “controller-centric” approach, so that typical websites can be built by just writing templates.

Zotonic uses PostgreSQL for data persistence. The section “Data Model: a Document Database in SQL” explains the rationale for this choice.

While the main focus of this chapter is the performance characteristics of the web request stack, it is useful to know some of the other concepts that are at the heart of Zotonic.

The mod_backup module adds version control to the page editor and also runs a daily full database backup. Another module, mod_github, exposes a webhook which pulls, rebuilds and reloads a Zotonic site from GitHub, allowing for continuous deployment.

To enable the loose coupling and extensibility of code, communication between modules and core components is done by a notification mechanism which functions either as a map or as a fold over the observers of a certain named notification. By listening to notifications it becomes easy for a module to override or augment certain behaviour. The calling function decides whether a map or fold is used. For instance, the admin_menu notification is a fold over the modules, which allows modules to add or remove menu items in the admin menu.
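The difference between the two notification styles can be sketched in a few lines of Erlang. This is a minimal illustration, not Zotonic's actual notifier: the module name `notify_sketch`, the `{Priority, Fun}` observer list, and the menu atoms are all assumptions made for the example.

```erlang
-module(notify_sketch).
-export([map/3, foldl/4, demo/0]).

%% Observers are {Priority, Fun} pairs; lower priority values run first.
%% This registry shape is an assumption made for the sketch.
sorted(Observers) ->
    [Fun || {_Prio, Fun} <- lists:keysort(1, Observers)].

%% map: every observer sees the same message; all answers are collected.
map(Observers, Msg, Context) ->
    [Fun(Msg, Context) || Fun <- sorted(Observers)].

%% foldl: each observer receives the accumulator left by the previous one,
%% so observers can add to it or remove from it.
foldl(Observers, Msg, Acc0, Context) ->
    lists:foldl(fun(Fun, Acc) -> Fun(Msg, Acc, Context) end,
                Acc0, sorted(Observers)).

%% Three hypothetical modules observing a menu notification.
demo() ->
    AddPages = fun(admin_menu, Menu, _Ctx) -> Menu ++ [pages] end,
    AddBackup = fun(admin_menu, Menu, _Ctx) -> Menu ++ [backup] end,
    DropDashboard = fun(admin_menu, Menu, _Ctx) ->
                            lists:delete(dashboard, Menu)
                    end,
    Observers = [{20, AddBackup}, {10, AddPages}, {30, DropDashboard}],
    foldl(Observers, admin_menu, [dashboard], no_context).
```

Calling `notify_sketch:demo()` threads the menu through all three observers in priority order, which is the behaviour that lets one module extend a structure another module started.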

The main data model that Zotonic uses can be compared to Drupal’s Node module; “every thing is a thing”. The data model consists of hierarchically categorized resources which connect to other resources using labelled edges. Like its source of inspiration, the Anymeta CMS, this data model is loosely based on the principles of the Semantic Web.

Zotonic is an extensible system, and all parts of the system add up when you consider performance. For instance, you might add a module that intercepts web requests, and does something on each request. Such a module might impact the performance of the entire system. In this chapter we’ll leave this out of consideration, and instead focus on the core performance issues.

Most web sites live an unexceptional life in a small place somewhere on the web. That is, until one of their pages hits the front page of a popular website like CNN, BBC or Yahoo. In that case, the traffic to the website will likely increase to tens, hundreds, or even thousands of page requests per second in no time.

Such a sudden surge overloads a traditional web server and makes it unreachable. The term "Slashdot Effect" is named after the web site that started this kind of overwhelming referral traffic. Even worse, an overloaded server is sometimes very hard to restart, as the newly started server has empty caches, no database connections, often un-compiled templates, etc.

Many anonymous visitors requesting exactly the same page around the same time shouldn’t be able to overload a server. This problem is easily solved using a caching proxy like Varnish, which caches a static copy of the page and only checks for updates to the page once in a while.

A surge of traffic becomes more challenging when serving dynamic pages for every single visitor; these can't be cached. With Zotonic, we set out to solve this problem.

We realized that most web sites have

and decided to

Why fetch data from an external source (database, memcached) when another request fetched it already a couple of milliseconds ago? We always cache simple data requests. In the next section the caching mechanism is discussed in detail.

When rendering a page or included template, a developer can add optional caching directives. This caches the rendered result for a period of time.

Caching starts what we call the memo functionality: while the template is being rendered and one or more processes request the same rendering, the later processes will be suspended. When the rendering is done, all waiting processes will be sent the rendering result.

The memoization alone–without any further caching–gives a large performance boost by drastically reducing the amount of parallel template processing.
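The suspend-and-share behaviour described above can be sketched with a small `gen_server`. This is an illustrative sketch under assumptions, not Zotonic's code: the module name `memo_sketch`, the `render/3` API, and the message shapes are hypothetical.

```erlang
-module(memo_sketch).
-behaviour(gen_server).
-export([start_link/0, render/3]).
-export([init/1, handle_call/3, handle_cast/2, handle_info/2]).

start_link() ->
    gen_server:start_link(?MODULE, [], []).

%% Render Key with RenderFun, or wait for a rendering that is already running.
render(Server, Key, RenderFun) ->
    gen_server:call(Server, {render, Key, RenderFun}, infinity).

init([]) ->
    %% State: a map from Key to the callers waiting for that key.
    {ok, #{}}.

handle_call({render, Key, RenderFun}, From, Waiting) ->
    case Waiting of
        #{Key := Callers} ->
            %% Already being rendered: park this caller until the result arrives.
            {noreply, Waiting#{Key := [From | Callers]}};
        _ ->
            %% First caller: render in a separate, linked process so the
            %% server keeps accepting requests. A crash in RenderFun takes
            %% the server down with it, which also releases all waiters.
            Server = self(),
            spawn_link(fun() -> Server ! {rendered, Key, RenderFun()} end),
            {noreply, Waiting#{Key => [From]}}
    end.

handle_cast(_Msg, Waiting) ->
    {noreply, Waiting}.

handle_info({rendered, Key, Result}, Waiting) ->
    %% Send the single rendering result to everyone who asked for it.
    {Callers, Rest} = maps:take(Key, Waiting),
    [gen_server:reply(From, Result) || From <- Callers],
    {noreply, Rest};
handle_info(_Other, Waiting) ->
    {noreply, Waiting}.
```

If three processes call `memo_sketch:render(Server, home, Fun)` while the first rendering is still running, `Fun` is evaluated once and all three receive the same result.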

Zotonic introduces several bottlenecks on purpose. These bottlenecks limit the access to processes that use limited resources or are expensive (in terms of CPU or memory) to perform. Bottlenecks are currently set up for the template compiler, the image resizing process, and the database connection pool.

The bottlenecks are implemented by having a limited worker pool for performing the requested action. For CPU or disk intensive work, like image resizing, there is only a single process handling the requests. Requesting processes post their request in the Erlang message queue of the worker process and wait until their request is handled. If a request times out it will just crash. Such a crashing request will return HTTP status 503 Service Unavailable.
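A single-worker bottleneck of this kind can be sketched as a registered `gen_server` whose callers pass a timeout. The module name `resize_bottleneck`, the request tuple, and the simulated 50 ms of work are assumptions for illustration; a real worker would invoke an image tool.

```erlang
-module(resize_bottleneck).
-behaviour(gen_server).
-export([start_link/0, resize/3]).
-export([init/1, handle_call/3, handle_cast/2]).

start_link() ->
    gen_server:start_link({local, ?MODULE}, ?MODULE, [], []).

%% Callers queue up in the worker's mailbox. If no reply arrives within
%% TimeoutMs, gen_server:call/3 exits and the calling request process
%% crashes, while the worker and all other requests carry on.
resize(Image, Size, TimeoutMs) ->
    gen_server:call(?MODULE, {resize, Image, Size}, TimeoutMs).

init([]) ->
    {ok, no_state}.

%% Requests are handled strictly one at a time.
handle_call({resize, Image, {W, H}}, _From, State) ->
    timer:sleep(50),                      % stand-in for the expensive work
    {reply, {ok, {Image, W, H}}, State}.

handle_cast(_Msg, State) ->
    {noreply, State}.
```

Because only the caller exits on a timeout, the layer that owns the request process can translate that crash into an error response without the worker ever noticing.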

Waiting processes don’t use many resources and the bottlenecks protect against overload if a template is changed or an image on a hot page is replaced and needs cropping or resizing.

In short: a busy server can still dynamically update its templates, content and images without getting overloaded. At the same time it allows single requests to crash while the system itself continues to operate.

One more word on database connections. In Zotonic a process fetches a database connection from a pool of connections for every single query or transaction. This enables many concurrent processes to share a very limited number of database connections. Compare this with most (PHP) systems where every request holds a connection to the database for the duration of the complete request.

Zotonic closes unused database connections after a time of inactivity. One connection is always left open so that the system can always handle an incoming request or background activity quickly. The dynamic connection pool drastically reduces the number of open database connections on most Zotonic web sites to one or two.

The hardest part of caching is cache invalidation: keeping the cached data fresh and purging stale data. Zotonic uses a central caching mechanism with dependency checks to solve this problem.

This section describes Zotonic’s caching mechanism in a top-down fashion: from the browser down through the stack to the database.

The client-side caching is done by the browser. The browser caches images, CSS and JavaScript files. Zotonic does not allow client-side caching of HTML pages; it always dynamically generates all pages. It is very efficient in doing so (as described in the previous section), and not caching HTML pages prevents showing old pages after users log in, log out, or place comments.

Zotonic improves client-side performance in two ways:

The first is done by adding the appropriate HTTP headers to the response2:

Last-Modified: Tue, 18 Dec 2012 20:32:56 GMT

Expires: Sun, 01 Jan 2023 14:55:37 GMT

Date: Thu, 03 Jan 2013 14:55:37 GMT

Cache-Control: public, max-age=315360000

Multiple CSS or JavaScript files are concatenated into a single file, separating individual files by a tilde and only mentioning paths if they change between files:

http://example.org/lib/bootstrap/css/bootstrap~bootstrap-responsive~bootstrap-base-site~/css/jquery.loadmask~z.growl~z.modal~site~63523081976.css

The number at the end is a timestamp of the newest file in the list. The necessary CSS link or JavaScript script tag is generated using the {% lib %} template tag.

Zotonic is a large system, and many parts in it do caching in some way. The sections below explain some of the more interesting parts.

The controller handling the static files has some optimizations for handling these files. It can decompose combined file requests into a list of individual files.
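Decomposing a combined request can be sketched as follows. The rules are inferred from the example URL earlier in this section and are assumptions, not a description of Zotonic's parser: parts are separated by a tilde, the last part is the timestamp, the extension applies to every file, and a part without a directory inherits the directory of the previous part. The module name `lib_path_sketch` is hypothetical, and the input is the path below `/lib/`.

```erlang
-module(lib_path_sketch).
-export([decompose/1]).

%% Split a combined request path into the individual file paths.
decompose(Path) ->
    Ext = filename:extension(Path),                    % e.g. ".css"
    Parts = string:split(filename:rootname(Path), "~", all),
    Files = lists:droplast(Parts),                     % drop the timestamp
    {Result, _LastDir} = lists:foldl(
        fun(Part, {Acc, Dir}) ->
            NewDir = case filename:dirname(Part) of
                         "." -> Dir;                   % no directory given
                         D -> string:trim(D, leading, "/")
                     end,
            File = filename:join(NewDir, filename:basename(Part) ++ Ext),
            {[File | Acc], NewDir}
        end, {[], ""}, Files),
    lists:reverse(Result).
```

For the example URL, this yields three files under `bootstrap/css/` followed by four under `css/`, each with the `.css` extension restored.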

The controller has checks for the If-Modified-Since header, serving the HTTP status 304 Not Modified when appropriate.

On the first request it will concatenate the contents of all the static files into one byte array (an Erlang binary).3 This byte array is then cached in the central depcache (see Depcache) in two forms: compressed (with gzip) and uncompressed. Depending on the Accept-Encoding headers sent by the browser, Zotonic will serve either the compressed or uncompressed version.

This caching mechanism is efficient enough that its performance is similar to many caching proxies, while still fully controlled by the web server. With an earlier version of Zotonic and on simple hardware (quad core 2.4 GHz Xeon from 2008) we saw throughputs of around 6000 requests/second and were able to saturate a gigabit ethernet connection requesting a small (~20 KB) image file.

Templates are compiled into Erlang modules, after which the byte code is kept in memory. Compiled templates are called as regular Erlang functions.

The template system detects any changes to templates and will recompile the template during runtime. When compilation is finished Erlang’s hot code upgrade mechanism is used to load the newly compiled Erlang module.

The main page and template controllers have options to cache the template rendering result. Caching can also be enabled only for anonymous (not logged in) visitors. For most websites, anonymous visitors generate the bulk of all requests, and those pages will not be personalized and will be (almost) identical. Note that the template rendering result is an intermediate result and not the final HTML. This intermediate result contains (among others) untranslated strings and JavaScript fragments. The final HTML is generated by parsing this intermediate structure, picking the correct translations and collecting all JavaScript.

The concatenated JavaScript, along with a unique page ID, is placed at the position of the {% script %} template tag. This should be just above the closing </body> tag. The unique page ID is used to match this rendered page with the handling Erlang processes and for WebSocket/Comet interaction on the page.

Like with any template language, templates can include other templates. In Zotonic, included templates are usually compiled inline to eliminate any performance lost by using included files.

Special options can force runtime inclusion. One of those options is caching. Caching can be enabled for anonymous visitors only, a caching period can be set, and cache dependencies can be added. These cache dependencies are used to invalidate the cached rendering if any of the shown resources is changed.

Another method to cache parts of templates is to use the {% cache %} ... {% endcache %} block tag, which caches a part of a template for a given amount of time. This tag has the same caching options as the include tag, but has the advantage that it can easily be added in existing templates.

All caching is done in memory, in the Erlang VM itself. No communication between computers or operating system processes is needed to access the cached data. This greatly simplifies and optimizes the use of the cached data.

As a comparison, accessing a memcached server typically takes 0.5 milliseconds. In contrast, accessing main memory within the same process takes 1 nanosecond on a CPU cache hit and 100 nanoseconds on a CPU cache miss, not to mention the huge speed difference between memory and network.4

Zotonic has two in-memory caching mechanisms:5

The central caching mechanism in every Zotonic site is the depcache, which is short for dependency cache. The depcache is an in-memory key-value store with a list of dependencies for every stored key.

For every key in the depcache we store:

If a key is requested then the cache checks if the key is present, not expired, and if the serial numbers of all the dependency keys are lower than the serial number of the cached key. If the key is still valid its value is returned; otherwise the key and its value are removed from the cache and undefined is returned.

Alternatively if the key was being calculated then the requesting process would be added to the waiting list of the key.
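The serial-number check can be sketched with three ETS tables. This is a simplified illustration under assumptions: the module name `depcache_sketch`, the table layout, and the `set/5`, `lookup/2` and `flush/2` functions are hypothetical; there is no waiting list and no garbage collector; and the serial counter is kept in the dependency table under a reserved key.

```erlang
-module(depcache_sketch).
-export([new/0, set/5, lookup/2, flush/2]).

-record(meta, {key, expire, serial, deps}).

%% Tables: cached values, per-key metadata, and dependency serials.
new() ->
    Deps = ets:new(deps, [set, public]),
    ets:insert(Deps, {'$serial', 0}),          % shared serial counter
    #{data => ets:new(data, [set, public, {read_concurrency, true}]),
      meta => ets:new(meta, [set, public, {keypos, #meta.key},
                             {read_concurrency, true}]),
      deps => Deps}.

next_serial(DepsTab) ->
    ets:update_counter(DepsTab, '$serial', 1).

%% Store Value under Key for MaxAge seconds, depending on the keys in Deps.
set(#{data := Data, meta := Meta, deps := DepsTab}, Key, Value, MaxAge, Deps) ->
    Expire = erlang:monotonic_time(second) + MaxAge,
    ets:insert(Data, {Key, Value}),
    ets:insert(Meta, #meta{key = Key, expire = Expire,
                           serial = next_serial(DepsTab), deps = Deps}),
    ok.

%% Invalidate everything that depends on Dep by giving Dep a newer serial.
flush(#{deps := DepsTab}, Dep) ->
    ets:insert(DepsTab, {Dep, next_serial(DepsTab)}),
    ok.

%% Valid means: present, not expired, and every dependency serial is
%% lower than the serial of the cached key.
lookup(#{data := Data, meta := Meta, deps := DepsTab}, Key) ->
    Now = erlang:monotonic_time(second),
    case ets:lookup(Meta, Key) of
        [#meta{expire = Expire, serial = Serial, deps = Deps}] when Expire > Now ->
            Fresh = lists:all(
                      fun(Dep) -> dep_serial(DepsTab, Dep) < Serial end, Deps),
            case Fresh of
                true ->
                    [{Key, Value}] = ets:lookup(Data, Key),
                    {ok, Value};
                false ->
                    evict(Data, Meta, Key)
            end;
        [_Expired] ->
            evict(Data, Meta, Key);
        [] ->
            undefined
    end.

dep_serial(DepsTab, Dep) ->
    case ets:lookup(DepsTab, Dep) of
        [{Dep, Serial}] -> Serial;
        [] -> 0                                % never flushed
    end.

evict(Data, Meta, Key) ->
    ets:delete(Data, Key),
    ets:delete(Meta, Key),
    undefined.
```

Invalidation is cheap in this scheme: flushing a dependency writes one row, and stale entries are detected lazily the next time they are read.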

The implementation makes use of ETS, the Erlang Term Storage, a standard hash table implementation which is part of the Erlang OTP distribution. The following ETS tables are created by Zotonic for the depcache:

#meta{key, expire, serial, deps}.

The ETS tables are optimized for parallel reads and usually directly accessed by the calling process. This prevents any communication between the calling process and the depcache process.

The depcache process is called for:

The depcache can get quite large. To prevent it from growing too large there is a garbage collector process. The garbage collector slowly iterates over the complete depcache, evicting expired or invalidated keys. If the depcache size is above a certain threshold (100 MiB by default) then the garbage collector speeds up and evicts 10% of all encountered items. It keeps evicting until the cache is below its threshold size.

100 MiB might sound small in this era of multi-TB databases. However, as the cache mostly contains textual data it will be big enough to contain the hot data for most web sites. Otherwise the size of the cache can be changed in configuration.

The other memory-caching paradigm in Zotonic is the process dictionary memo cache. As described earlier, the data access patterns are dictated by the templates. The caching system uses simple heuristics to optimize access to data.

Important in this optimization is data caching in the Erlang process dictionary of the process handling the request. The process dictionary is a simple key-value store in the same heap as the process. Basically, it adds state to the functional Erlang language. Use of the process dictionary is usually frowned upon for this reason, but for in-process caching it is useful.

When a resource is accessed (remember, a resource is the central data unit of Zotonic), it is copied into the process dictionary. The same is done for computational results–like access control checks–and other data like configuration values.

Every property of a resource–like its title, summary or body text–must, when shown on a page, perform an access control check and then fetch the requested property from the resource. Caching all the resource’s properties and its access checks greatly speeds up resource data usage and removes many drawbacks of the hard-to-predict data access patterns by templates.
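In-process memoization with the process dictionary can be sketched in a few lines. The module name `pdict_memo_sketch` and the `{memo, Key}` key shape are assumptions for this example; the sketch has no size limits or other pressure valves.

```erlang
-module(pdict_memo_sketch).
-export([memo/2, flush/0]).

%% Return the value cached for Key in this process's dictionary, or
%% compute it with Fun, remember it, and return it. Values are wrapped
%% in {ok, _} so that a cached 'undefined' is told apart from a miss.
memo(Key, Fun) ->
    case erlang:get({memo, Key}) of
        {ok, Value} ->
            Value;
        undefined ->
            Value = Fun(),
            erlang:put({memo, Key}, {ok, Value}),
            Value
    end.

%% Drop all memoized entries but leave other dictionary keys alone.
flush() ->
    [erlang:erase(K) || {{memo, _} = K, _} <- erlang:get()],
    ok.
```

A call such as `pdict_memo_sketch:memo({rsc, 1, title}, Fun)` evaluates `Fun` only the first time within a process; the cache disappears with the process, so nothing needs to be invalidated across requests.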

As a page or process can use a lot of data this memo cache has a couple of pressure valves:

$ancestors are kept.

When the memo cache is disabled then every lookup is handled by the depcache. This results in a call to the depcache process and data copying between the depcache and the requesting process.

The Erlang Virtual Machine has a few properties that are important when looking at performance.

The Erlang VM is specifically designed to do many things in parallel, and as such has its own implementation of multiprocessing within the VM. Erlang processes are scheduled on a reduction count basis, where one reduction is roughly equivalent to a function call. A process is allowed to run until it pauses to wait for input (a message from some other process) or until it has executed a fixed number of reductions. For each CPU core, a scheduler is started with its own run queue. It is not uncommon for Erlang applications to have thousands to millions of processes alive in the VM at any given point in time.

Processes are not only cheap to start but also cheap in memory at 327 words per process, which amounts to ~2.5 KiB on a 64-bit machine.6 This compares to ~500 KiB for Java and a default of 2 MiB for pthreads.

Since processes are so cheap to use, any processing that is not needed for a request’s result is spawned off into a separate process. Sending an email or logging are both examples of tasks that could be handled by separate processes.

In the Erlang VM messages between processes are relatively expensive, as the message is copied into the heap of the receiving process. This copying is needed due to Erlang’s per-process garbage collector. Preventing data copying is important, which is why Zotonic’s depcache uses ETS tables, which can be accessed from any process.

There is a big exception for copying data between processes. Byte arrays larger than 64 bytes are not copied between processes. They have their own heap and are separately garbage collected.

This makes it cheap to send a big byte array between processes, as only a reference to the byte array is copied. However, it does make garbage collection harder, as all references must be garbage collected before the byte array can be freed.

Sometimes, references to parts of a big byte array are passed: the bigger byte array can’t be garbage collected until the reference to the smaller part is garbage collected. A consequence is that copying a byte array is an optimization if that frees up the bigger byte array.
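The effect can be observed with the standard `binary` module. In this small sketch the module name `binary_copy_sketch` and the fixed 16-byte title are assumptions for illustration.

```erlang
-module(binary_copy_sketch).
-export([title_ref/1, title_copy/1]).

%% Returns a sub-binary: a reference into Page that keeps all of Page alive.
title_ref(<<Title:16/binary, _Rest/binary>>) ->
    Title.

%% Returns an independent copy, so Page can be freed once nothing else
%% refers to it.
title_copy(Page) ->
    binary:copy(title_ref(Page)).
```

`binary:referenced_byte_size/1` reports how much memory a binary keeps alive: for the result of `title_ref/1` it is the size of the whole page, for the result of `title_copy/1` it is just the 16 bytes of the title.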

String processing in any functional language can be expensive because strings are often represented as linked lists of integers, and, due to the functional nature of Erlang, data cannot be destructively updated.

If a string is represented as a list, then it is processed using tail recursive functions and pattern matching. This makes it a natural fit for functional languages. The problem is that the data representation of a linked list has a big overhead and that messaging a list to another process always involves copying the full data structure. This makes a list a non-optimal choice for strings.

Erlang has its own middle-of-the-road answer to strings: io-lists. Io-lists are nested lists containing lists, integers (single byte value), byte arrays and references to parts of other byte arrays. Io-lists are extremely easy to use and appending, prefixing or inserting data is inexpensive, as they only need changes to relatively short lists, without any data copying.7

An io-list can be sent as-is to a “port” (a file descriptor), which flattens the data structure to a byte stream and sends it to a socket.

Example of an io-list:

[ <<"Hello">>, 32, [ <<"Wo">>, [114, 108], <<"d">> ] ].

Which flattens to the byte array:

<<"Hello World">>.

Interestingly, most string processing in a web application consists of:

Erlang’s io-list is the perfect data structure for the first use case. And the second use case is resolved by an aggressive sanitization of all content before it is stored in the database.

These two combined means that for Zotonic a rendered page is just a big concatenation of byte arrays and pre-sanitized values in a single io-list.
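A minimal sketch of building a page as an io-list follows. The module name `iolist_sketch`, the `page/2` function, and the simple escaping function are assumptions for illustration; the escaping merely stands in for the sanitization that happens before values are stored.

```erlang
-module(iolist_sketch).
-export([page/2, escape/1]).

%% Minimal HTML escaping of a binary value.
escape(Bin) when is_binary(Bin) ->
    << <<(escape_char(C))/binary>> || <<C>> <= Bin >>.

escape_char($<) -> <<"&lt;">>;
escape_char($>) -> <<"&gt;">>;
escape_char($&) -> <<"&amp;">>;
escape_char($") -> <<"&quot;">>;
escape_char(C) -> <<C>>.

%% Build a page by nesting static fragments and values. Only a few list
%% cells are allocated here; the binaries themselves are not copied.
page(Title, Body) ->
    [<<"<html><head><title>">>, Title, <<"</title></head><body>">>,
     [<<"<h1>">>, Title, <<"</h1>">>],
     Body,
     <<"</body></html>">>].
```

The nested result can be written to a socket as-is. `iolist_to_binary/1` flattens it when a single binary is needed, for example to inspect the output in a test, and `iolist_size/1` gives the byte count for a Content-Length header without flattening.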

Zotonic makes heavy use of a relatively big data structure, the Context. This is a record containing all data needed for a request evaluation. It contains:

User-Agent class (e.g., text, phone, tablet, desktop)

All this data can make a large data structure. Sending this large Context to different processes working on the request would result in a substantial data copying overhead.

That is why we try to do most of the request processing in a single process: the Mochiweb process that accepted the request. Additional modules and extensions are called using function calls instead of using inter-process messages.

Sometimes an extension is implemented using a separate process. In that case the extension provides a function accepting the Context and the process ID of the extension process. This interface function is then responsible for efficiently messaging the extension process.

Zotonic also needs to send a message when rendering cacheable sub-templates. In this case the Context is pruned of all intermediate template results and some other unneeded data (like logging information) before the Context is messaged to the process rendering the sub-template.

We don’t care too much about messaging byte arrays as they are, in most cases, larger than 64 bytes and as such will not be copied between processes.

For serving large static files, there is the option of using the Linux sendfile() system call to delegate sending the file to the operating system.

Webmachine is a library implementing an abstraction of the HTTP protocol. It is implemented on top of the Mochiweb library which implements the lower level HTTP handling, like acceptor processes, header parsing, etc.

Controllers are made by creating Erlang modules implementing callback functions. Examples of callback functions are resource_exists, previously_existed, authorized, allowed_methods, process_post, etc. Webmachine also matches request paths against a list of dispatch rules; assigning request arguments and selecting the correct controller for handling the HTTP request.

With Webmachine, handling the HTTP protocol becomes easy. We decided early on to build Zotonic on top of Webmachine for this reason.

While building Zotonic a couple of problems with Webmachine were encountered.

last_modified) are called multiple times during request evaluation.

The first problem (no partitioning of dispatch rules) is only a nuisance. It makes the list of dispatch rules less intuitive and more difficult to interpret.

The second problem (copying the dispatch list for every request) turned out to be a show stopper for Zotonic. The dispatch list could become so large that copying it could take the majority of the time needed to handle a request.

The third problem (multiple calls to the same functions) forced controller writers to implement their own caching mechanisms, which is error prone.

The fourth problem (no log on crash) makes it harder to see problems when in production.

The fifth problem (no HTTP Upgrade) prevents us from using the nice abstractions available in Webmachine for WebSocket connections.

The above problems were so serious that we had to modify Webmachine for our own purposes.

First a new option was added: dispatcher. A dispatcher is a module implementing the dispatch/3 function which matches a request to a dispatch list. The dispatcher also selects the correct site (virtual host) using the HTTP Host header. When testing a simple “hello world” controller, these changes gave a threefold increase of throughput. We also observed that the gain was much higher on systems with many virtual hosts and dispatch rules.

Webmachine maintains two data structures, one for the request data and one for the internal request processing state. These data structures were referring to each other and actually were almost always used in tandem, so we combined them in a single data structure. This made it easier to remove the use of the process dictionary and to add the new single data structure as an argument to all functions inside Webmachine. The change resulted in 20% less processing time per request.

We optimized Webmachine in many other ways that we will not describe in detail here, but the most important points are:

charsets_provided, content_types_provided, encodings_provided, last_modified, and generate_etag).

From a data perspective it is worth mentioning that all properties of a “resource” (Zotonic’s main data unit) are serialized into a binary blob; “real” database columns are only used for keys, querying and foreign key constraints.

Separate “pivot” fields and tables are added for properties, or combinations of properties that need indexing, like full text columns, date properties, etc.

When a resource is updated, a database trigger adds the resource’s ID to the pivot queue. This pivot queue is consumed by a separate Erlang background process which indexes batches of resources at a time in a single transaction.

Choosing SQL made it possible for us to hit the ground running: PostgreSQL has a well known query language, great stability, known performance, excellent tools, and both commercial and non-commercial support.

Beyond that, the database is not the limiting performance factor in Zotonic. If a query becomes the bottleneck, then it is the task of the developer to optimize that particular query using the database’s query analyzer.

Finally, the golden performance rule for working with any database is: Don’t hit the database; don’t hit the disk; don’t hit the network; hit your cache.

We don’t believe too much in benchmarks as they often test only minimal parts of a system and don’t represent the performance of the whole system. This is especially true when a system has many moving parts, and in Zotonic the caching system and the handling of common access patterns are an integral part of the design.

What a benchmark might do is show where you could optimize the system first.

With this in mind we benchmarked Zotonic using the TechEmpower JSON benchmark, which is basically testing the request dispatcher, JSON encoder, HTTP request handling and the TCP/IP stack.

The benchmark was performed on an Intel i7 quad core M620 @ 2.67 GHz. The command was wrk -c 3000 -t 3000 http://localhost:8080/json. The results are shown in Table 9.1.

| Platform | Requests/sec (×1000) |
|---|---|
| Node.js | 27 |
| Cowboy (Erlang) | 31 |
| Elli (Erlang) | 38 |
| Zotonic | 5.5 |
| Zotonic w/o access log | 7.5 |
| Zotonic w/o access log, with dispatcher pool | 8.5 |

Zotonic’s dynamic dispatcher and HTTP protocol abstraction give lower scores in such a micro benchmark. Those are relatively easy to solve, and the solutions were already planned.

For the 2013 abdication of the Dutch queen and the subsequent inauguration of the new Dutch king, a national voting site was built using Zotonic. The client requested 100% availability and high performance: the site had to be able to handle 100,000 votes per hour.

The solution was a system with four virtual servers, each with 2 GB RAM and running its own independent Zotonic system. Three nodes handled voting and one node was for administration. All nodes were independent, but the voting nodes shared every vote with at least two other nodes, so no vote would be lost if a node crashed.

A single vote gave ~30 HTTP requests for dynamic HTML (in multiple languages), Ajax, and static assets like CSS and JavaScript. Multiple requests were needed for selecting the three projects to vote on and filling in the details of the voter.

When tested, we easily met the customer’s requirements without pushing the system to the max. The voting simulation was stopped at 500,000 complete voting procedures per hour, using a bandwidth of around 400 Mbps, with 99% of request handling times below 200 milliseconds.
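To put these figures in perspective, the short calculation below converts them into per-second rates. It is a back-of-the-envelope sketch under stated assumptions: "~30 requests" is treated as exactly 30, a megabit is taken as 10^6 bits, and the load is assumed to be spread evenly over the three voting nodes.

```python
VOTES_PER_HOUR = 500_000     # rate at which the simulation was stopped
REQUIRED_PER_HOUR = 100_000  # the customer's requirement
REQUESTS_PER_VOTE = 30       # "~30 HTTP requests" per vote (assumed exact)
BANDWIDTH_MBPS = 400         # observed bandwidth in megabits per second
VOTING_NODES = 3             # assumption: load is spread evenly over them

requests_per_second = VOTES_PER_HOUR * REQUESTS_PER_VOTE / 3600
per_node = requests_per_second / VOTING_NODES
bytes_per_request = BANDWIDTH_MBPS * 1_000_000 / 8 / requests_per_second
headroom = VOTES_PER_HOUR / REQUIRED_PER_HOUR

print(f"{requests_per_second:.0f} requests/s in total")    # 4167
print(f"{per_node:.0f} requests/s per voting node")        # 1389
print(f"{bytes_per_request:.0f} bytes per request")        # 12000
print(f"{headroom:.0f}x the required vote rate")           # 5x
```

Under these assumptions the simulation ran at roughly 4,200 requests per second in total, or about 1,400 per voting node, at five times the required vote rate.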

From the above it is clear that Zotonic can handle popular dynamic web sites. On real hardware we have observed much higher performance, especially for the underlying I/O and database performance.

When building a content management system or framework it is important to take the full stack of your application into consideration, from the web server, the request handling system, the caching systems, down to the database system. All parts must work well together for good performance.

Much performance can be gained by preprocessing data. An example of preprocessing is pre-escaping and sanitizing data before storing it into the database.
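As a minimal illustration of this idea (in Python rather than Erlang, with a dictionary standing in for the database, and with the hypothetical helper names `save_comment` and `render_comment`), escaping can be done once when the data is written, so that every later render is a plain string concatenation. Only escaping is shown; sanitizing rich HTML would be a separate step at the same point.

```python
import html

store = {}  # stands in for the database


def save_comment(comment_id, raw_text):
    # Escape once, at write time...
    store[comment_id] = html.escape(raw_text.strip())


def render_comment(comment_id):
    # ...so that rendering needs no per-request escaping work.
    return "<p>" + store[comment_id] + "</p>"


save_comment(1, "<b>Tom & Jerry</b>")
print(render_comment(1))  # <p>&lt;b&gt;Tom &amp; Jerry&lt;/b&gt;</p>
```

The trade-off is that stored data is tied to its output format, which is acceptable when, as on most web sites, reads vastly outnumber writes.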

Caching hot data is a good strategy for web sites with a clear set of popular pages followed by a long tail of less popular pages. Placing this cache in the same memory space as the request handling code gives a clear edge over using separate caching servers, both in speed and simplicity.

Another optimization for handling sudden bursts in popularity is to dynamically match similar requests and process them once for the same result. When this is well implemented, a proxy can be avoided and all HTML pages can be generated dynamically.
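The idea of processing similar concurrent requests only once can be sketched as follows. This is a generic illustration in Python with threads, not Zotonic's Erlang implementation; the class name `SingleFlight`, its `do` method and the `render_home_page` function are hypothetical. The first caller for a key does the work, and callers that arrive while it is in progress wait and receive the same result.

```python
import threading
import time


class _Call:
    """Bookkeeping for one computation that is currently in progress."""

    def __init__(self):
        self.done = threading.Event()
        self.result = None
        self.error = None


class SingleFlight:
    """Let concurrent callers with the same key share one computation."""

    def __init__(self):
        self._lock = threading.Lock()
        self._in_flight = {}  # key -> _Call

    def do(self, key, compute):
        with self._lock:
            call = self._in_flight.get(key)
            leader = call is None
            if leader:
                call = _Call()
                self._in_flight[key] = call
        if leader:
            try:
                call.result = compute()
            except BaseException as exc:
                call.error = exc
            finally:
                with self._lock:
                    del self._in_flight[key]
                call.done.set()  # wake up all waiting followers
        else:
            call.done.wait()
        if call.error is not None:
            raise call.error
        return call.result


render_count = 0


def render_home_page():
    """Stand-in for an expensive dynamic page render."""
    global render_count
    render_count += 1
    time.sleep(0.3)
    return "<html>home</html>"


flights = SingleFlight()
results = []


def worker():
    results.append(flights.do("GET /", render_home_page))


threads = [threading.Thread(target=worker) for _ in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(len(results), "responses from", render_count, "render(s)")
```

Note that this sketch only coalesces requests that overlap in time; it keeps nothing once the computation has finished, so it complements a cache rather than replacing one.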

Erlang is a great match for building dynamic web-based systems due to its lightweight multiprocessing, failure handling, and memory management.

Using Erlang, Zotonic makes it possible to build a very competent and well-performing content management system and framework without needing separate web servers, caching proxies, memcache servers, or e-mail handlers. This greatly simplifies system management tasks.

On current hardware a single Zotonic server can handle thousands of dynamic page requests per second, thus easily serving the vast majority of web sites on the World Wide Web.

Using Erlang, Zotonic is prepared for the future of multi-core systems with dozens of cores and many gigabytes of memory.

The authors would like to thank Michiel Klønhammer (Maximonster Interactive Things), Andreas Stenius, Maas-Maarten Zeeman and Atilla Erdődi.

However, it is possible to put another web server in front, for example when other web systems are running on the same server. For normal cases, this is not needed. Interestingly, a typical optimisation in other frameworks is to put a caching web server such as Varnish in front of the application server for serving static files, but for Zotonic this does not speed up those requests significantly, as Zotonic also caches static files in memory.↩

Note that Zotonic does not set an ETag. Some browsers check the ETag on every use of the file by making a request to the server, which defeats the whole idea of caching and making fewer requests.↩

A byte array, or binary, is a native Erlang data type. If it is smaller than 64 bytes it is copied between processes; larger ones are shared between processes. Erlang also shares parts of byte arrays between processes by passing references to those parts instead of copying the data itself, which makes byte arrays an efficient and easy-to-use data type.↩

See "Latency Numbers Every Programmer Should Know" at http://www.eecs.berkeley.edu/~rcs/research/interactive_latency.html.↩

In addition to these mechanisms, the database server performs some in-memory caching, but that is not within the scope of this chapter.↩

See http://www.erlang.org/doc/efficiency_guide/advanced.html#id68921↩

Erlang can also share parts of a byte array with references to those parts, thus circumventing the need to copy that data. An insert into a byte array can be represented by an io-list of three parts: a reference to the unchanged head bytes, the inserted value, and a reference to the unchanged tail bytes.↩